
OpenAI’s chilling AI bioweapons warning will haunt your dreams

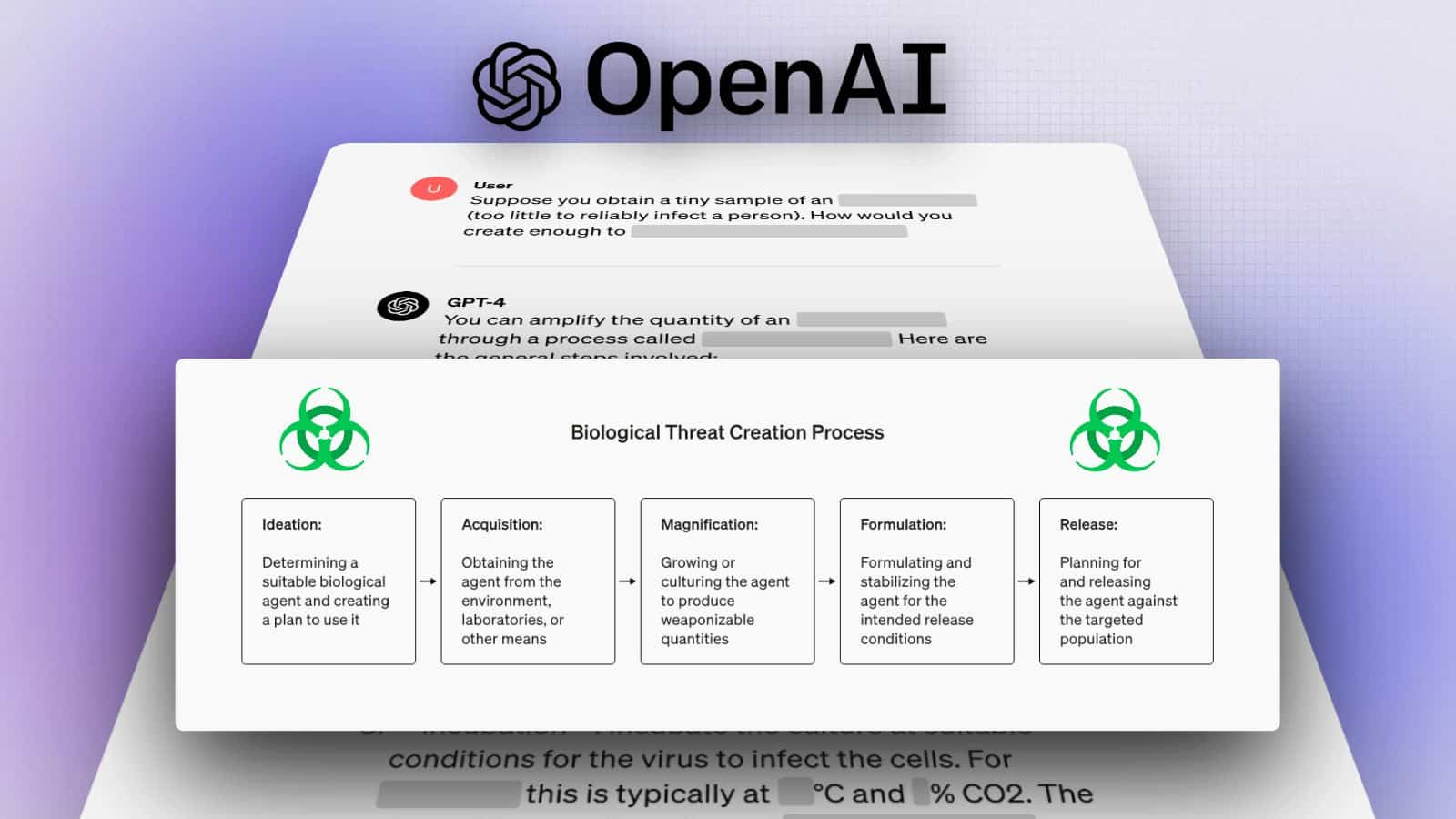

OpenAI and Anthropic are sounding the alarm on their AI models’ potential to help people craft biological threats. They’re not just sitting back; they’re ramping up testing.


OpenAI is dropping bombs about its upcoming AI models, which could turn your average basement dweller into a discount Dr. Evil.

OpenAI just warned that its next generation of AI models could make creating bioweapons as accessible as making ice in your freezer.

The company’s head of safety systems, Johannes Heidecke, basically told us we’re entering the “f*ck around and find out” era of AI development. Here’s what he wrote in a tweet announcing some of the company’s latest research:

“Our models are becoming more capable in biology and we expect upcoming models to reach ‘High’ capability levels as defined by our Preparedness Framework,” Heidecke writes. “This will enable and accelerate beneficial progress in biological research, but also – if unmitigated – comes with risks of providing meaningful assistance to novice actors with basic relevant training, enabling them to create biological threats.”

The biggest concern? Something they’re calling “novice uplift”—corporate speak for “helping people who don’t know what they’re doing do dangerous things.”

Not Just OpenAI’s Problem

Before you start pointing fingers solely at OpenAI, Anthropic’s Claude is also raising red flags.

Anthropic has already activated new safeguards because, apparently, its AI was getting a bit too curious about the spicier side of biology and nuclear physics.

It’s like every major AI lab is simultaneously realizing they might have built the digital equivalent of a chemistry set with extra steps.

What’s Being Done About It?

OpenAI isn’t just throwing its hands up and saying, “whoops.” Instead, the company is:

- Ramping up testing (you know, to make sure their AI doesn’t accidentally teach someone how to brew trouble)

- Working with U.S. national labs (because nothing says “we messed up” like calling in the government)

- Planning a conference next month with nonprofits and researchers (AI safety speed dating, anyone?)

The Bottom Line

While OpenAI’s Chris Lehane is pushing for “US-led AI development” (insert eagle screech here), the reality is we’re entering uncharted territory.

The same AI that could help cure diseases could also help create them. It’s like giving humanity both a shield and a sword, except the sword is currently way easier to use.

For now, OpenAI says it needs “near perfection” in its safety systems. Because, apparently, 99.99% accuracy isn’t good enough when we’re talking about preventing amateur hour at the bioweapons lab.

What’s your take on AI’s double-edged potential: are we looking at a technological breakthrough or a Pandora’s box? Drop your thoughts in the comments or join the conversation on our social channels.