Google says AI is confidently wrong (about a third of the time)



If you’ve ever read an AI chatbot answer and thought, “Wow, that sounds smart… but is it?” Google has some numbers that may validate your anxiety.

In a refreshingly blunt self-assessment, Google just published results from its new FACTS Benchmark Suite, and the verdict is awkward: even the best AI chatbots today top out just shy of a 70 percent factual accuracy rate.

Yes, that means roughly one out of every three answers can be wrong, delivered with the calm confidence of someone who absolutely did not check their work.

At the top of Google’s leaderboard sits its own Gemini 3 Pro, scoring 69 percent overall accuracy.

Close behind were Gemini 2.5 Pro and ChatGPT-5 from OpenAI, hovering around 62 percent.

Further down the chart, Grok 4 from xAI scored about 54 percent, while Claude 4.5 Opus from Anthropic landed near 51 percent. In other words, nobody’s winning a truth trophy just yet.
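For anyone who wants to check the "one in three" math, here is a minimal Python sketch. It uses only the rounded scores quoted in this article, and it assumes, as the headline does, that any answer not scored as accurate counts as wrong. The function names are made up for illustration and are not part of the FACTS suite.

```python
# Overall accuracy figures as quoted in this article (rounded, in percent).
reported_accuracy = {
    "Gemini 3 Pro": 69,
    "Gemini 2.5 Pro": 62,
    "ChatGPT-5": 62,
    "Grok 4": 54,
    "Claude 4.5 Opus": 51,
}


def error_rate(accuracy_percent):
    """Share of answers not scored as accurate, in percent (assumed 'wrong')."""
    return 100 - accuracy_percent


def one_in_n(accuracy_percent):
    """Express the error rate as 'about one wrong answer in every N'."""
    return 100 / error_rate(accuracy_percent)


# Print the models from most to least accurate with their implied miss rates.
for model, accuracy in sorted(reported_accuracy.items(), key=lambda kv: -kv[1]):
    print(f"{model}: {error_rate(accuracy)}% wrong, "
          f"about 1 in {one_in_n(accuracy):.1f} answers")
```

By this rough math, even the leaderboard's top model misses on a little under one answer in three, and the models near the bottom miss on about one in two.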

The FACTS Benchmark Suite, built by Google’s FACTS team in collaboration with Kaggle, was designed to test something many AI benchmarks gloss over: whether the answers are actually correct.

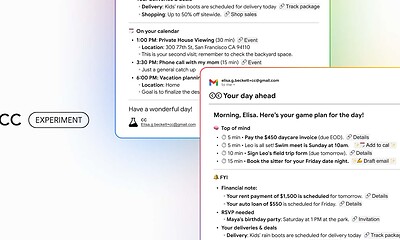

It evaluates models across four real-world areas: raw factual knowledge, web search accuracy, grounding answers in provided documents, and multimodal understanding, such as reading charts and images.

That last category turned out to be the biggest trouble spot.

Multimodal accuracy often fell below 50 percent, meaning an AI can confidently misread a chart, pull the wrong number, and move on like nothing happened.

In fields like finance, healthcare, or law, that’s not a quirky bug. It’s a potential disaster.

Google’s takeaway isn’t that AI is useless. It’s that blind trust is still a bad idea.

The models are improving, but the data makes one thing clear: until there are stronger guardrails and human oversight, chatbots are better treated as helpful assistants, not oracles of truth.