Google will pay you to break its AI (but only the right way)

Google is willing to pay up to $20,000 for your trouble (or even $30,000 if your report is extra impressive).

![Google search](https://knowtechie.com/wp-content/uploads/2024/06/google-search.jpg)


Google just put a bounty on rogue AIs, and it’s paying cash for anyone who can make them misbehave.

On Monday, the company rolled out a new AI Bug Bounty Program, a reward system aimed at catching “AI bugs” before they go all Skynet on us.

The idea is simple: if you can get a Google AI product to do something shady, like make your smart home unlock the door for a stranger, or secretly email your inbox summary to a hacker, Google wants to know about it.

And it’s willing to pay up to $20,000 for your trouble (or even $30,000 if your report is extra impressive).

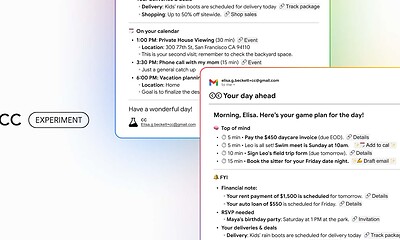

The company’s examples of “qualifying bugs” sound like the plot of a tech-thriller: a poisoned Google Calendar event that shuts off your lights, or a sneaky prompt that gets a large language model to spill your private data.

Basically, if you can make AI act like a mischievous intern with admin access, you’re the kind of person Google wants on its side.

This isn’t Google’s first flirtation with AI bug hunting. Researchers have already earned over $430,000 in payouts since the company quietly started inviting experts to probe its AI systems two years ago.

But this new program makes things official, spelling out exactly what counts as a real “AI bug” versus just, say, Gemini getting confused about what day it is. (Pro tip: hallucinations don’t pay.)

Issues like AI spouting misinformation or copyrighted material are still off the bounty list. Google says those should go through its regular product feedback channels, so its safety teams can retrain the models rather than reward the chaos.

To sweeten the rollout, Google also unveiled CodeMender, an AI agent that automatically hunts down and patches vulnerable code. It has already contributed 72 security fixes to open-source projects.

So yes, Google wants you to break its AIs, responsibly. Just don’t expect a payout for getting Gemini to write a bad haiku.