Google’s Project Soli puts Minority Report’s gesture interaction to shame

Google’s next technological breakthrough, Project Soli, brings futuristic user interaction to your fingertips, literally!

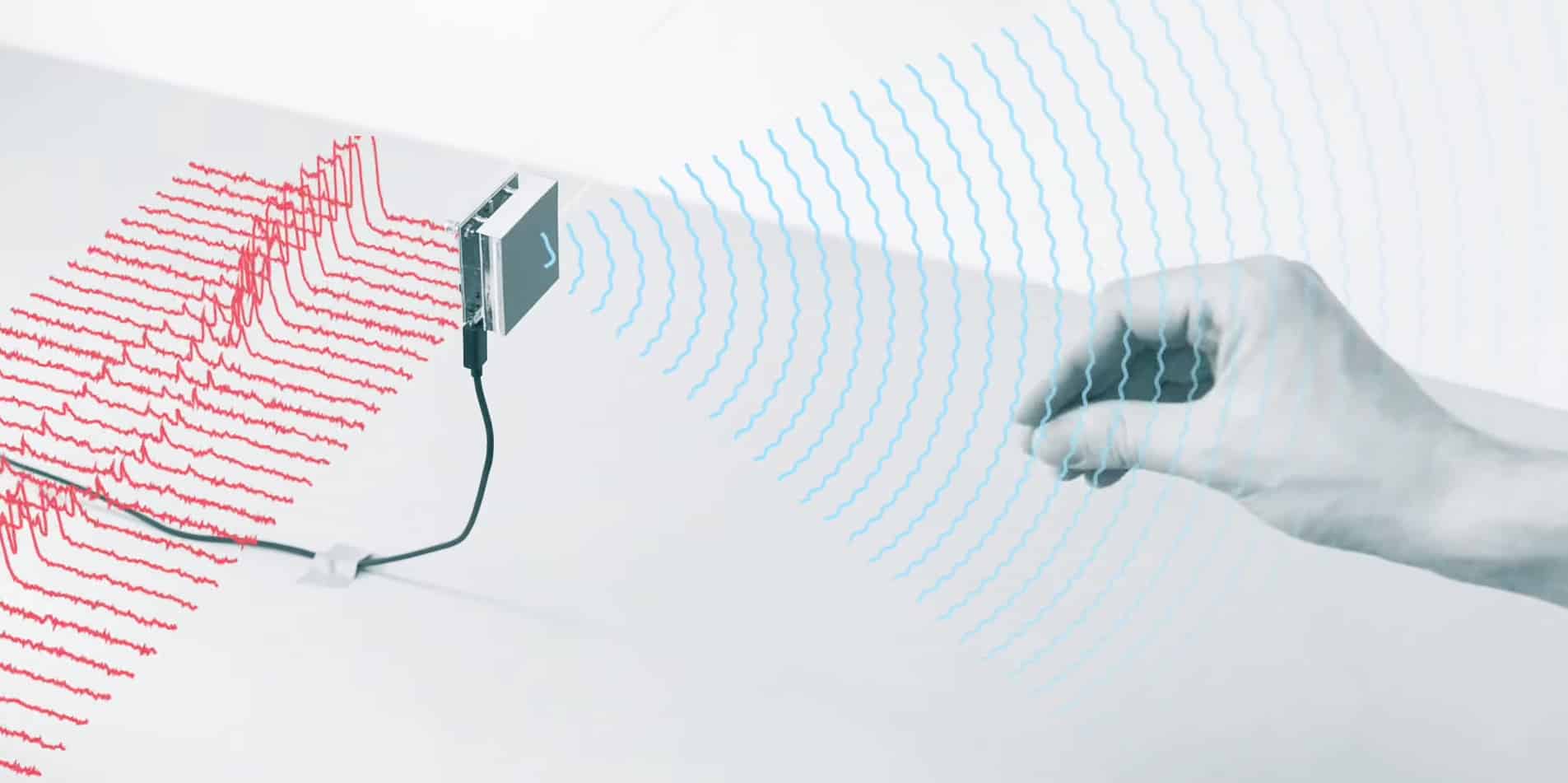

Micro-gesture recognition is the technology behind Google’s Project Soli. As complicated as that may sound, the underlying technology is simply a radar that’s small enough to fit into just about anything. This radar-based sensor detects high-speed, submillimetre motions that can be interpreted as user interaction.

Even though Project Soli’s demo video has a Kickstarter vibe to it, the plan seems solid and well into development. The gesture recognition examples shown include pressing two fingers together to simulate a button, sliding your thumb against your index finger to act as a slider or a scroll knob, and even using your hand to travel in a virtual space.

Ivan Poupyrev, founder of Project Soli and Technical Program Lead at Google’s Advanced Technology and Projects (ATAP) division, explains in the demo video:

“What is most exciting about it is that you can shrink the entire radar and put it in a tiny chip. That’s what makes this approach so promising. It’s extremely reliable. There’s nothing to break. There’s no moving parts. There’s no lenses. There’s nothing, just a piece of sand on your board.”

Indeed, the potential uses for Project Soli’s technology seem endless. It could fit into smartphones, wearables, computers, or just about any device or electrical appliance out there.

Even so, I’m surprised that the demo video didn’t mention the accessibility benefits this technology could offer to people who are visually impaired.