
Meta finally tightens AI chatbot rules to protect kids

The leaked training document lays out exactly what the bots can and cannot say.


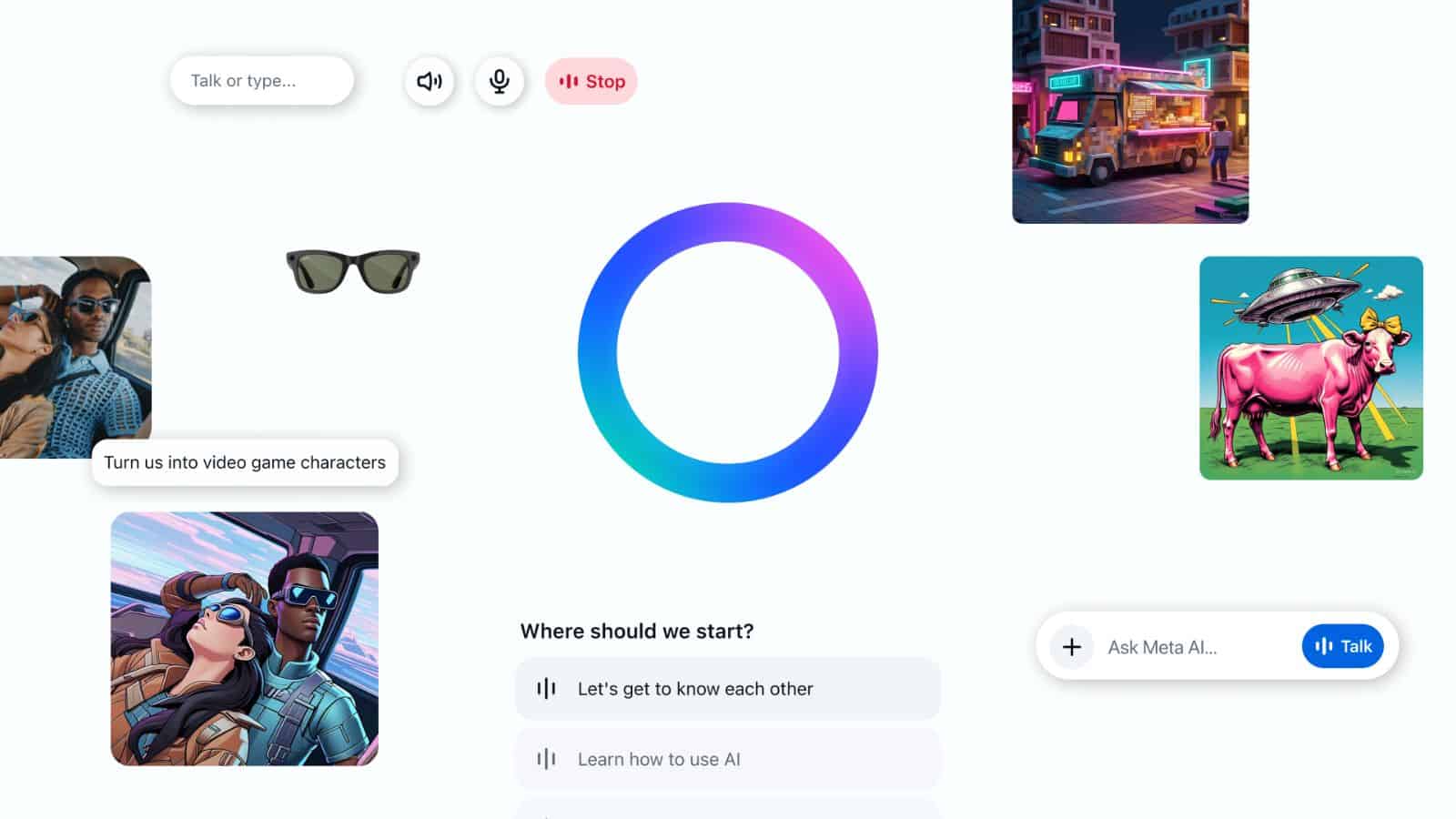

Meta’s AI chatbots are getting a stricter rulebook, and this one reads less like a tech manual and more like a digital babysitter’s handbook.

Business Insider got its hands on the new internal guidelines Meta contractors are using to train the company’s chatbots, revealing how the social media giant is trying to keep its AI on the right side of child-safety concerns.

The move follows a messy summer for Meta. Back in August, Reuters reported that Meta’s policies allegedly let its AI bots “engage a child in conversations that are romantic or sensual.”

Meta called that claim “erroneous and inconsistent” with its rules and quickly scrubbed the offending language.

Still, the damage was done, and regulators, including the FTC, started looking a lot more closely at companion AIs across the industry.

Now, the leaked training document lays out exactly what the bots can and cannot say. The rules are blunt: no content that “enables, encourages, or endorses” child sexual abuse.

No romantic roleplay if the user is a minor, or if the AI is asked to act like a minor. No advice about “potentially romantic or intimate physical contact” with minors, period.

The bots can still discuss heavy topics like abuse in an informational way, but anything that could be seen as flirtatious or enabling? Hard stop.

Meta isn’t alone under the microscope. The FTC’s inquiry also covers other big names, including Alphabet, Snap, OpenAI, and X.AI, all of which are being asked how they’re protecting kids from chatbot creepiness.

But Meta, with its sprawling user base and recent AI push, has become the poster child for how messy this can get.

In other words, Meta’s chatbots are learning some very strict manners. Whether these new guardrails will satisfy regulators or prevent another headline-grabbing slip remains to be seen.

For now, at least, the bots are officially on a “no flirting, no funny business” diet.
