How computer vision learns to read the streets

AI is revolutionizing how we detect graffiti, turning maintenance into a precision operation.

Key takeaways

- Graffiti is a planned, creative craft, not random paint on walls.

- Its bold colors and shapes make clear visual signals for cameras.

- Vision systems compare “before and after” images to spot fresh tags quickly.

- Mapped alerts help crews find the exact panel or pillar that needs cleaning.

Cities change by the hour. A fresh tag on a station pillar or the side of a train can appear between two commutes, and by the next day it might be covered or replaced.

For transit operators and maintenance teams, spotting these new markings quickly is more than housekeeping.

It’s a way to keep assets presentable, schedule cleaning crews efficiently, and understand where activity is clustering so patrols and cameras can be placed where they matter. The challenge is scale and speed.

Walls, tunnels, and fleets generate far more video and images than people can review.

That’s where modern vision systems help. Without going deep into the technical specs, they turn raw pixels into signals about “what’s new” and “where,” so a dispatcher can act within minutes rather than days.

This piece starts with the craft behind the markings (how pieces and tags are made), then shifts to the practical side: the models, datasets, and pipelines that let computers notice fresh paint on walls, subway cars, and platforms fast.

Inside the craft: the creativity and process of graffiti artists

At the core of a memorable piece is letterform. Many graffiti artists start by practicing a handstyle, the fluid signature that trains muscle memory.

From there, they build letters with volume, shadow, and highlights, borrowing from calligraphy, comics, and sign painting.

Color choices are deliberate. High-contrast fills pop against concrete; complementary outlines make letters readable from a train window.

Caps and nozzles change line width and texture, from hairline cuts to fat fills, so a single can can produce outlines, fills, and soft blends.

Although the art form seems familiar, much of it is still misunderstood or underestimated.

Graffiti is usually associated with unwanted paint on walls, yet it is also a form of expression and a way of creating something meaningful.

You get a fuller picture by listening to the artists themselves. When RISK, a pioneering graffiti artist, talks about his adventures on the Team Ignition podcast, you realize how many details of the craft are still little known.

But let’s look a bit deeper at how graffiti is made, so it’s clearer what computer vision has to detect.

First, surface drives technique. Brick adds a tooth that catches paint differently from glazed tile or stainless steel; panels on train cars flex, so artists adapt spacing and pressure.

Stencils and rollers allow large coverage when reach matters, while freehand fades give depth. Many pieces are planned, yet the best still leave room for improvisation—responding to drips, wind, or the way a seam breaks a letter’s spine.

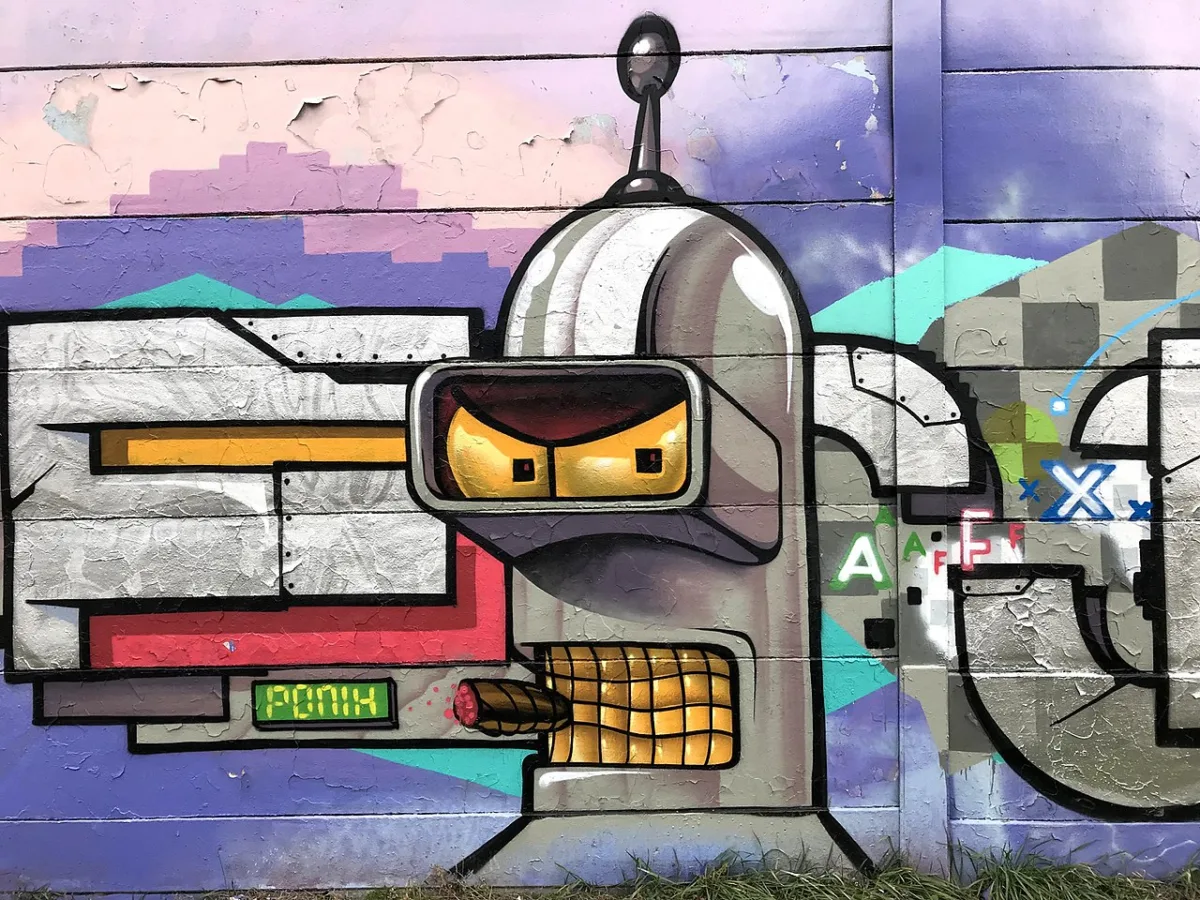

This Budapest graffiti mural depicts Bender, the iconic robot from the animated series Futurama, reimagined in a bold street-art style. The artist uses sharp black outlines, metallic shading, and glowing yellow eyes to give the character a menacing, comic-book presence.

What stands out is the creative problem-solving: turning typography into motion, making color sing on rough walls, and composing within odd frames like columns or door recesses.

Graffiti artists work under constraints of light, space, and time, and still produce pieces that feel alive.

That tension between planning and improvisation is exactly what makes their marks so visually distinct, and why they’re such strong signals for cameras and algorithms later.

How vision systems spot fresh tags fast

At street level, the winning recipe is change detection plus object detection. First, systems capture periodic “baselines” for walls, tunnels, and train exteriors.

New images are aligned to those baselines using structure-from-motion and orthophoto generation, so yesterday and today line up pixel for pixel.

Once aligned, detectors isolate painted markings and compare them across time.
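The compare-across-time step can be illustrated with a minimal sketch. It assumes the two images are already aligned and reduced to grayscale grids; the function name `changed_region` and the threshold of 40 are hypothetical choices for illustration, not values from any of the studies cited here.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale rows of 0-255 intensities


def changed_region(
    baseline: Image, current: Image, threshold: int = 40
) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) around changed pixels, or None."""
    same_shape = len(baseline) == len(current) and all(
        len(a) == len(b) for a, b in zip(baseline, current)
    )
    if not same_shape:
        raise ValueError("images must be aligned to the same size")

    rows, cols = [], []
    for r, (old_row, new_row) in enumerate(zip(baseline, current)):
        for c, (old, new) in enumerate(zip(old_row, new_row)):
            # A pixel counts as changed when its intensity moved past the threshold.
            if abs(new - old) > threshold:
                rows.append(r)
                cols.append(c)

    if not rows:
        return None
    return min(rows), min(cols), max(rows), max(cols)


# Yesterday: a plain light wall. Today: three dark pixels of fresh paint.
baseline = [[200] * 6 for _ in range(4)]
current = [row[:] for row in baseline]
current[1][2] = current[1][3] = current[2][3] = 30

print(changed_region(baseline, current))  # (1, 2, 2, 3)
```

Real systems work on much larger images and usually pair this kind of differencing with a trained detector, but the idea of turning "what changed" into a box with coordinates is the same.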

One approach trained on façade imagery reached 88% accuracy when detecting markings on orthophotos, using a Faster R-CNN detector and a carefully labeled set of 1,682 markings across 1,022 images.

The pipeline used periodic image capture, stitched façades, and automated bounding boxes to quantify where new paint appeared.

Freshness is the key metric for operations. TU Wien’s INDIGO work designed a change-detection dataset with 6,902 image pairs collected over 29 sessions along a 50-meter test site.

Synthetic “cameras” and exclusion masks make the comparisons fair and repeatable, which matters when the same wall looks different as light shifts hour to hour.

Model performance varies by task. A Lisbon study ran a two-stage system—first classifying images into three categories, then localizing the areas that needed action.

Reported results included 81.4% overall classification accuracy and a 70.3% IoU for the localization stage, showing that city-scale workflows can be built from off-the-shelf architectures plus transfer learning.
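For readers unfamiliar with IoU (intersection over union), here is a small sketch of how the score is computed for two rectangles. The box format and the `iou` function are assumptions for illustration; the Lisbon study may have measured IoU on pixel masks rather than boxes.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)   # where the model thinks the marking is
labeled = (5, 0, 15, 10)     # where a human annotator drew it

print(round(iou(predicted, labeled), 3))  # 0.333
```

A score of 1.0 means the two regions match exactly and 0.0 means they do not overlap at all, so a 70.3% IoU indicates predicted areas that cover most of the labeled region.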

On platforms and rolling stock, these ideas translate into mounted cameras, scheduled passes, and “what changed?” alerts tied to map coordinates.

When the wall-to-wall alignment is solid, even thin letter outlines stand out against yesterday’s pixels, letting crews act before the paint layers up.

Making alerts useful in stations and depots

Turnaround time is everything on a railway. A practical pipeline ingests camera frames, stabilizes them (crucial for moving trains), normalizes color so shadows don’t confuse the model, and runs a hybrid change detector that blends pixel-level differences with feature-level matches.
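To see why the normalization step matters, consider a toy sketch. It assumes a strip of grayscale pixel values and uses a simple zero-mean, unit-variance rescale; the function names are hypothetical, and this handles only a uniform brightness shift, not cast shadows or color changes that production pipelines also face.

```python
from statistics import mean, pstdev
from typing import List


def normalize(pixels: List[float]) -> List[float]:
    """Rescale pixels to zero mean and unit variance."""
    mu = mean(pixels)
    sigma = pstdev(pixels)
    if sigma == 0:
        return [0.0] * len(pixels)  # flat image: nothing to rescale
    return [(p - mu) / sigma for p in pixels]


def mean_abs_diff(a: List[float], b: List[float]) -> float:
    """Average per-pixel absolute difference between two equal-length strips."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


wall = [100, 120, 140, 160]
shaded = [p - 40 for p in wall]  # same wall, uniformly darker

raw = mean_abs_diff(wall, shaded)
normalized = mean_abs_diff(normalize(wall), normalize(shaded))

print(raw)         # 40.0
print(normalized)  # 0.0
```

Without normalization, every pixel looks "changed" by 40 levels; after it, the two strips are identical, so only genuinely new paint would stand out.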

In recent work validated on 6,902 image pairs, the hybrid approach reported a median F1-score around 80%, with recall 77% and precision 87%, averaging about 16 seconds per image pair on a desktop CPU.

That profile is fast enough for overnight sweeps or near-real-time station monitoring.
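The arithmetic behind those figures is easy to check. The sketch below combines the reported precision and recall into an F1-score and estimates how many image pairs fit into a time window at 16 seconds each. The eight-hour window is an assumption for illustration, and the combined F1 need not equal the reported median, which is taken across individual image pairs.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pairs_per_window(window_hours: float, seconds_per_pair: float = 16.0) -> int:
    """How many image pairs one machine can process in the given window."""
    return int(window_hours * 3600 // seconds_per_pair)


print(round(f1_score(0.87, 0.77), 3))  # 0.817
print(pairs_per_window(8))             # 1800
```

So a single desktop CPU could work through roughly 1,800 image pairs in an eight-hour overnight window, and more machines scale that linearly.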

As one research paper summarized, “With a precision of 87% and a recall of 77%, the results show that the proposed change detection workflow can effectively indicate newly added graffiti.”

Scale also depends on alert quality. Large transport trials have shown that AI-assisted CCTV can generate tens of thousands of real-time alerts over a year at a single station.

That experience matters because the same queuing, throttling, and operator-handoff patterns apply when the goal is to flag new markings quickly without overwhelming staff.
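One simple throttling pattern is a per-location cooldown, sketched below. The `AlertThrottle` class, the location IDs, and the one-hour cooldown are all hypothetical; this is not how any particular transport operator's system works.

```python
from typing import Dict


class AlertThrottle:
    """Suppress repeat alerts for the same location within a cooldown period."""

    def __init__(self, cooldown_seconds: float = 3600.0) -> None:
        self.cooldown_seconds = cooldown_seconds
        self._last_sent: Dict[str, float] = {}

    def should_send(self, location_id: str, timestamp: float) -> bool:
        last = self._last_sent.get(location_id)
        if last is not None and timestamp - last < self.cooldown_seconds:
            return False  # still cooling down: staff were already told
        self._last_sent[location_id] = timestamp
        return True


throttle = AlertThrottle(cooldown_seconds=3600)
events = [
    ("pillar-12", 0),
    ("pillar-12", 600),   # same pillar ten minutes later
    ("panel-3", 700),
    ("pillar-12", 4000),  # same pillar after the cooldown has passed
]

sent = [loc for loc, t in events if throttle.should_send(loc, t)]
print(sent)  # ['pillar-12', 'panel-3', 'pillar-12']
```

The second alert for the pillar is dropped, so the operator sees one notification per location per hour instead of one per camera frame.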

FAQ

Q1: How does computer vision actually spot new graffiti?

A: Systems first capture “baseline” images of walls, tunnels, and trains. Later images are aligned to those baselines pixel by pixel, then change-detection and object-detection models highlight where new painted shapes have appeared.

Q2: What kind of models and data do these systems use?

A: The pipelines described here use deep learning detectors such as Faster R-CNN, trained on labeled datasets of façades and markings. They also rely on orthophotos (flattened wall images) and thousands of paired “before/after” shots so the model learns what real-world changes look like over time.

Q3: How accurate and fast are these tools in practice?

A: Reported figures range from a median F1-score of around 80% for change detection (87% precision, 77% recall) to 88% accuracy for detecting markings on orthophotos. The change-detection pipeline averaged about 16 seconds per image pair on a desktop CPU, which is fast enough for overnight sweeps or near-real-time station monitoring.