
OpenAI unveils its next-generation AI model, GPT-4

It can now understand images as input and reason with them in sophisticated ways.


OpenAI just announced the release of GPT-4, the company’s next-generation AI model.

OpenAI claims that GPT-4 is more creative and accurate than ever before, thanks to its broader general knowledge and problem-solving abilities.

GPT-4 can handle both text and image inputs, and it’s already integrated with some big players like Duolingo, Stripe, and Khan Academy.


For those looking to give it a spin, GPT-4 is available through ChatGPT Plus, OpenAI’s $20 monthly subscription service. The Microsoft Bing chatbot is already using it as well.

Developers will eventually get access to GPT-4 through OpenAI’s API, but for now, there’s a waitlist.

My favorite takeaway from this announcement is that GPT-4 can understand images as well as text.

For example, GPT-4 can identify and caption complex images. Given a picture of a plugged-in iPhone, it can recognize a Lightning Cable adapter.

Check out this example that OpenAI shared in its blog post:

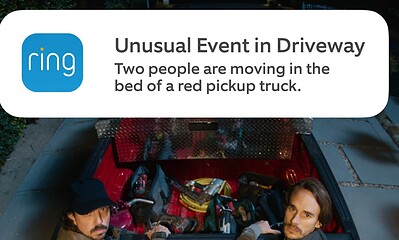

A user can give GPT-4 a prompt like “What is funny about this image? Describe it panel by panel.”

From here, GPT-4 can analyze the image, work out what it is about, and identify the objects in it. Here’s an example of its output after analyzing the image:

Panel 1: A smartphone with a VGA connector (a large, blue, 15-pin connector typically used for computer monitors) plugged into its charging port.

Panel 2: The package for the “Lightning Cable” adapter with a picture of a VGA connector on it.

Panel 3: A close-up of the VGA connector with a small Lightning connector (used for charging iPhones and other Apple devices) at the end.

The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port.

Pretty wild, right?

Of course, GPT-4 still has its limitations. For example, it can still output false replies in an overconfident tone and “hallucinate” facts without any reasoning. But it’s improving.

“In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle,” the company wrote in a blog post. “The difference comes out when the complexity of the task reaches a sufficient threshold — GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5.”

OpenAI CEO Sam Altman previously stated that the improvements are more iterative than groundbreaking. “People are begging to be disappointed, and they will be,” he cautioned.

How the tech community is responding to GPT-4

While the improvements may be subtle, there’s no doubt that this AI language model will continue to make waves throughout the world.

OpenAI still has a ton of work to do, but with the release of its API, it’s now up to the company and the rest of the AI community to develop safe and innovative use cases for GPT-4.

