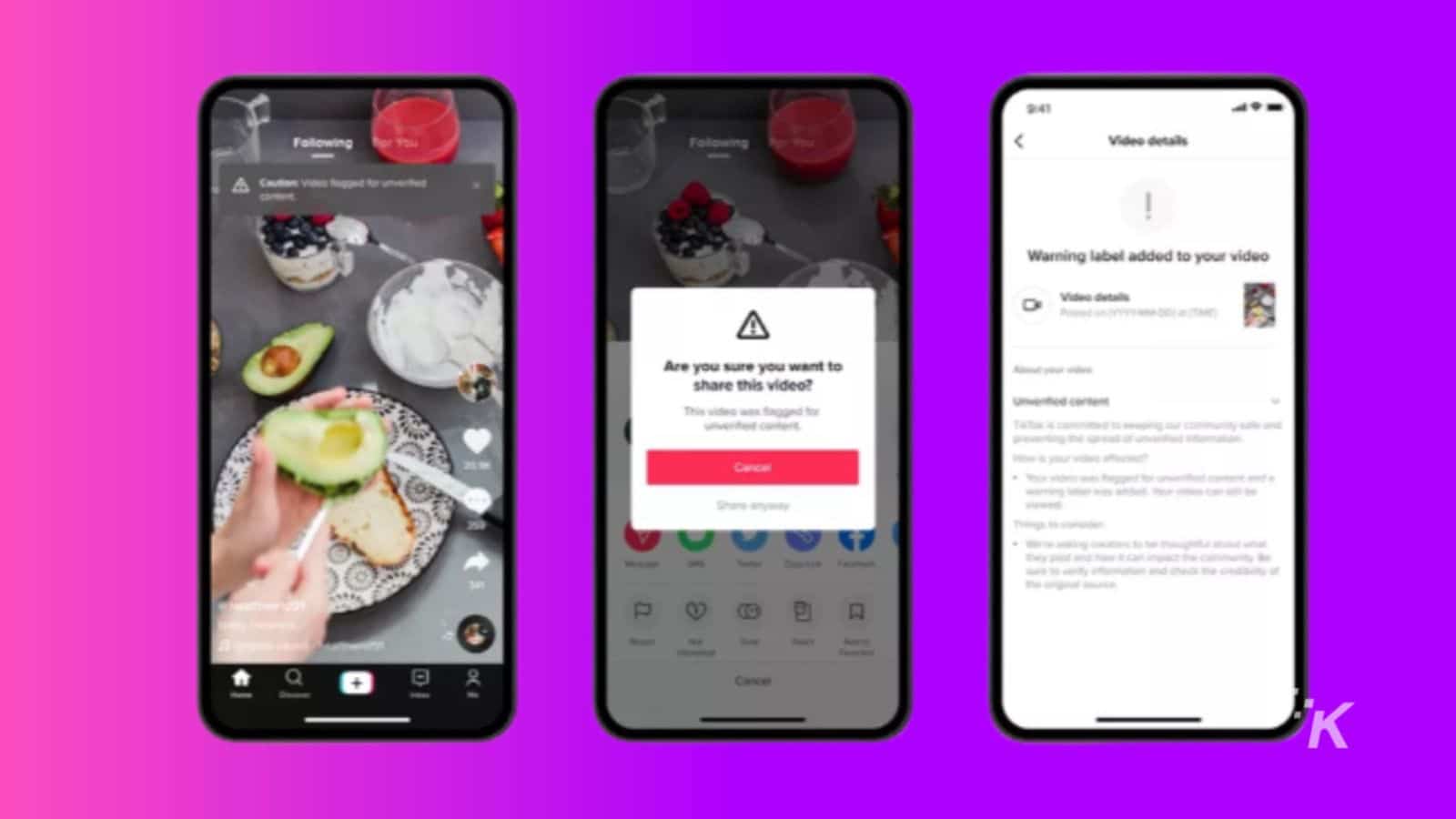

TikTok will now give you a heads up if you land on a video it thinks contains misinformation

This isn’t really a feature, but hey, we’ll take it.

Social media platforms have had it with misinformation on their networks, and now they are finding ways to curb its spread. Facebook is doing it, and so is Twitter.

If you happen to run across any of these videos, the post will display a warning label that says “Caution: Video flagged for unverified content.” This basically means one of

Naturally, TikTok will let the creator know the video they uploaded was flagged as unsubstantiated content. The weird thing is that

It’s unclear as to how
