YouTube tightens monetization for AI-generated content

The company hasn’t released the exact wording of the new policy yet, but it posted a help article explaining the general idea.

YouTube is about to change its rules for how creators can make money on the platform, especially when it comes to AI-generated and repetitive content.

Starting July 15, YouTube will update its YouTube Partner Program (YPP) monetization policies to better define what kind of content is considered inauthentic, and therefore not eligible to earn money.

Although YouTube has always required creators to post original and authentic videos, this update aims to clearly explain what “inauthentic” means today, especially with the rise of AI tools that make it easy to generate large volumes of low-effort content.

Some creators were initially worried that these changes might affect legitimate videos like reaction content or videos that include short clips from other sources.

But YouTube’s Head of Editorial & Creator Liaison, Rene Ritchie, clarified that these types of videos will still be allowed to earn money.

He explained that this is just a “minor update” to help identify spammy, repetitive, or mass-produced videos and keep them from being monetized, something YouTube has been trying to stop for years.

However, Ritchie didn’t address how much easier generative AI has made it to produce this kind of content.

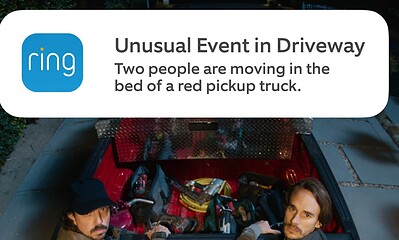

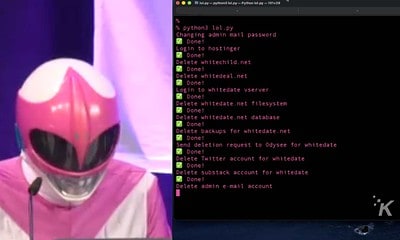

Tools can now quickly create fake news videos, voiceovers on stock footage, AI music, and even fully AI-generated storytelling, some of which rack up millions of views.

For example, one viral true crime series was found to be entirely made with AI, and there have even been phishing scams using deepfakes of YouTube’s CEO.

Even though YouTube is calling this a small policy update, the move reflects a bigger concern: if low-quality, AI-generated videos continue to flood the platform, it could hurt YouTube’s credibility and value.

These changes are likely meant to give YouTube the power to crack down on creators who abuse AI to pump out content purely for money, before the problem gets out of control.

Do you think YouTube’s crackdown on AI-generated content is necessary to maintain quality? Or could it unfairly penalize creators who use AI tools responsibly? Tell us below in the comments, or reach us via our Twitter or Facebook.