
Take it Down act: What it means for deepfakes and revenge porn

It demands swift action from social media platforms to remove nonconsensual intimate images within 48 hours.


So, President Trump just signed the Take It Down Act into law, and if you’re confused about what this means for your feeds, your privacy, or the next time a deepfake goes viral, you’re not alone.

The law isn’t just a “Trump-era” headline grabber—it’s a massive shift in how the U.S. deals with nonconsensual intimate images (NCII), including those unsettling AI-generated deepfakes, and it’s got the internet’s most chaotic corners buzzing.

What Is the Take It Down Act?

On its face, the Take It Down Act is simple. If you knowingly publish or threaten to publish someone’s nude or sexually explicit image without their consent, whether it’s a real photo or a deepfake, you could face fines and up to two years in prison. If the victim is a minor, the maximum rises to three years.

Social media platforms are now on the hook, too. They must remove these images within 48 hours of receiving a valid removal request. They must also make reasonable efforts to find and remove identical copies on their services.

The Federal Trade Commission (FTC) is the enforcer here, and platforms have about a year to get their acts together or else risk the government’s wrath.

This in-depth USA Today report also has a solid overview of the bill’s scope and passage.

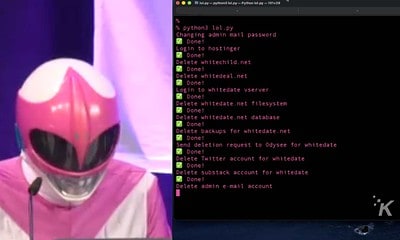

What Is a Deepfake?

A deepfake is an image or video that’s been manipulated using AI to make it look like someone is doing or saying something they didn’t. Deepfakes are often used to create fake news or sexually explicit content without a person’s consent.

Why Now? And Who’s Pushing For It?

This law didn’t just materialize out of thin air. The past few years have made it painfully obvious that AI tech is turning the “revenge porn” problem into an even bigger, messier monster.

Deepfakes—AI-manipulated images and videos that can make it look like anyone’s doing anything, anywhere—have exploded, with women and minors especially at risk.

The urgency and impact of these deepfakes are captured in this analysis of the rising threat to vulnerable groups.

Melania Trump lobbied hard for this, and the bill zipped through Congress with almost no resistance. It passed the Senate by unanimous consent and the House by a vote of 409 to 2.

Tech companies, parents, and youth advocates all cheered it on, as covered in this overview of the bipartisan push.

The Devil’s in the Details: How Does It Actually Work?

- Criminal Charges: Knowingly posting or threatening to post NCII (real or AI-generated) is now a federal crime.

- Platform Obligations: Social media companies have just 48 hours to remove reported content and must make “reasonable efforts” to scrub duplicates.

- FTC Enforcement: The FTC holds the stick—if platforms don’t comply, expect investigations, fines, and legal headaches.

- A Year to Prepare: Platforms have until May 2026, one year after the law’s enactment, to build their reporting and removal systems.

If you want more on the nitty-gritty and what this means for tech companies, here’s a useful guide to the Act’s requirements.

Why Are Some Advocates Worried?

You’d think everyone would be on board, but digital rights groups are waving giant red flags.

Groups like the Electronic Frontier Foundation (EFF) and the Center for Democracy and Technology (CDT) warn that the takedown rules are so broad they could be abused to censor all kinds of content—not just revenge porn.

The law might also put encrypted messaging in the crosshairs. Platforms can’t see inside end-to-end encrypted private messages, so they may have no way to find and remove reported content without weakening that encryption.

This EFF analysis details how the law could impact free expression and privacy, and this Wired report unpacks how tech platforms could be forced to over-police content.

Mary Anne Franks, president of the Cyber Civil Rights Initiative (CCRI), summed up the skepticism: “This could end up hurting victims more than it helps.”

The fear is that survivors will be promised swift justice, then get lost in the bureaucratic shuffle, while platforms pick and choose which complaints to take seriously. This interview explains why some advocates think the law could give victims “false hope.”

What About Free Speech—and Trump Himself?

Trump himself quipped, “I’m going to use that bill for myself, too, if you don’t mind, because nobody gets treated worse than I do online. Nobody.”

It’s not just a joke, though. Critics worry the law could become a tool for powerful people to silence critics or inconvenient reporting under the guise of “intimate image” abuse.

First Amendment debates are already brewing, and legal experts predict it might take years (and a few high-profile lawsuits) to figure out how far the law can really reach.

Does This Actually Fix the Deepfake Problem?

Short answer: it’s a start, but probably not the endgame.

- For Survivors: There’s finally a clear federal path to get illegal images taken down—and to hold perpetrators accountable.

- For Platforms: No more “not our problem.” They’re now required to act, fast.

- For Free Speech and Privacy: Expect lots of debate, and probably a Supreme Court case or two.

- For Deepfake Creators: It just got a lot riskier to mess with someone’s likeness.

The Bottom Line

If you’re worried about AI deepfakes or revenge porn, the Take It Down Act is a big step toward accountability.

But it’s not a silver bullet—and it might have side effects, from over-censorship to new privacy headaches. As with most things tech and law, we’ll be living in the gray area for a while.


What do you think about this law? Do you feel safer knowing there is going to be a framework to deal with AI deepfakes and revenge porn? Tell us below in the comments, or via our Twitter or Facebook.