
YouTube will no longer lead you down a rabbit hole of conspiracy videos

This is why we can’t have nice things.


Good news for the easily brainwashed: YouTube has announced that it’s making sweeping changes to its recommendation engine, so you will be less likely to be sucked into a descent into madness culminating in theories about lizard people and a flat earth. Seriously, people: science tells us these things are wrong. You won’t trust peer-reviewed scientists, but you’ll happily trust some screaming, angry man on YouTube?

Anyway, I digress. This is a positive move from YouTube, which has long faced criticism for how its recommended videos queue works. Who knew that the best way to get people to spend even more time on YouTube was to steadily recommend pablum and drivel from Infowars and its ilk? Everyone but the YouTube team, apparently, but at least something is being done about it now.

What does this mean for viewers?

It means that watching one video whose content doesn’t explicitly break YouTube’s community standards, like a flat-earth theory, will no longer earn the user a slew of recommended conspiracy theory videos. YouTube says this change will reduce the spread of content that “comes close to” violating its community policies but “doesn’t quite cross the line.”

YouTube isn’t restricting the videos themselves, though, so they remain available to anyone who subscribes to the channel or searches for them directly. It’s a positive step, but it feels like a half-step. Infowars might have been kicked off the platform for finally violating the community standards (and for ban evasion), but there’s no end of copycats and smaller channels that peddle the same idiocy.

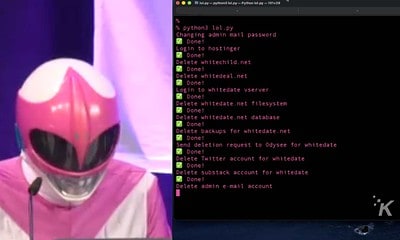

How one programmer who worked on YouTube’s AI explains it

YouTube announced they will stop recommending some conspiracy theories such as flat earth.

I worked on the AI that promoted them by the *billions*.

Here is why it’s a historic victory. Thread. 1/ https://t.co/wJ1jbUcvJE

— Guillaume Chaslot (@gchaslot) February 9, 2019

One of the Googlers who helped create the AI that powers YouTube’s recommendation queue chimed in on Twitter.

In his thread, Guillaume lauds the change and explains that the AI was built to keep users on the platform longer so that it could serve more ad content. The algorithm gets biased by the laser focus of conspiracy watchers and then tries to replicate that success with normal users. Presumably, this is why conspiracy-type videos turned up in everyone’s recommended lists.

Garbage in, garbage out, eh.

