Grief tech seems as predatory as a Victorian-era séance

AI cannot wake the dead, but that won’t stop people from selling the service.

Many so-called grief tech startups are popping up.

These companies promise the impossible: interactive digital immortality powered by AI. But training large language models on the writings of a deceased loved one does not and cannot bring them back.

Oliver Bateman wrote an article for UnHerd about attempting to “resurrect” his dad using GPT-4.

The AI, trained on thousands of emails, generated text that bore some resemblance to his father’s writing, but the chat program was not the man.

The text was off in substance and style—the typo-laden, idiosyncratic human writing input was output as clean, clear sentences.

For Futurism, Maggie Harrison chronicled an experience with a startup called Seance AI.

Like Bateman, Harrison interacted with a poor reflection of her father. The chat program ultimately just echoed her messages back, a partial active-listening technique that told her what she had just said.

AIs have a simple objective: satisfying the user. Large language models are prone to sycophancy and sandbagging. On subjective questions, they tend to flatter their users’ stated beliefs, and they endorse common misconceptions when users appear uneducated.

Grief tech is a use case especially likely to trigger AI sycophancy and sandbagging. After all, the AI is trying to not only pass the Turing test but pass it as someone’s deceased loved one.

As exemplified by thousands of years of mentalism and claims of communicating with lost souls, love is blind, and grief is blinding.

The late James Randi devoted much of his life to debunking those who claimed otherworldly powers to profit off those desperate for hope.

In the 1980s, Randi exposed Peter Popoff as a fraud on “The Tonight Show Starring Johnny Carson.” However, Popoff stayed in the fraud business decades after that broadcast because desperate people can convince themselves of anything.

People often cope with grief by lying to themselves. It’s very easy to believe a comforting lie, especially when vulnerable. Those lies can prevent us from moving on.

In her 1969 book “On Death and Dying,” Elisabeth Kübler-Ross labeled five stages of grief. Those stages are denial, anger, bargaining, depression, and acceptance. Often with some modification, the Kübler-Ross model is still in use in 2023.

In the Kübler-Ross model, building an AI chatbot of a loved one probably falls under denial or bargaining.

Andrea Eberle, a Licensed Clinical Social Worker (disclosure: Eberle is my significant other), likened grief tech to the Mirror of Erised from “Harry Potter and the Sorcerer’s Stone.”

The AI is a mirror that shows the user their heart’s deepest desire. It may help some along the path of grieving, but it will leave others stuck in an unfulfilling pattern.

Nearly every grief tech startup pitching digital metaphysics is powered by OpenAI’s API.

Given that the tech industry in general, and startups in particular, are full of con artists, it’s hard not to see those who build and market grief tech as startup bros exploiting anguish.

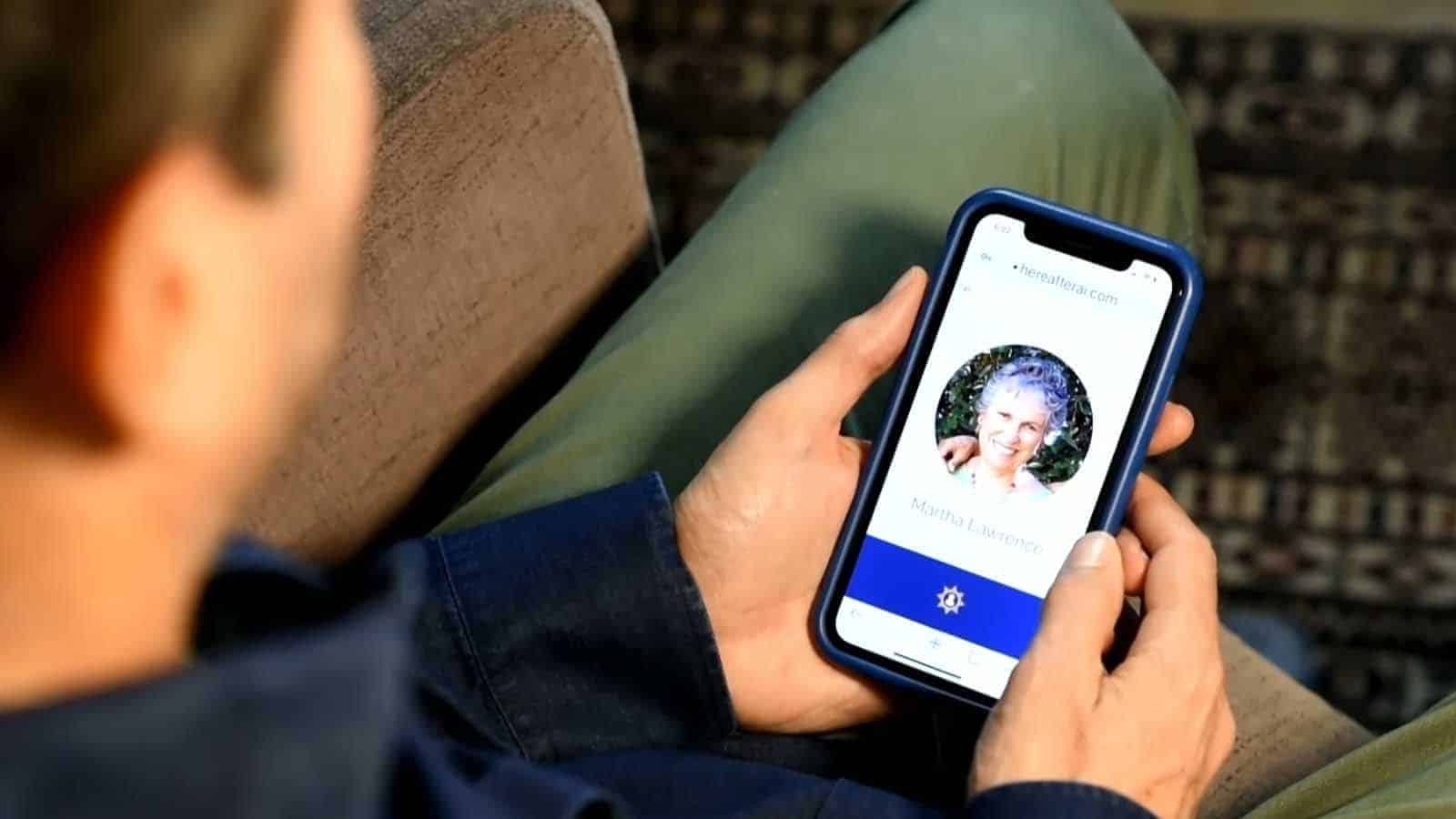

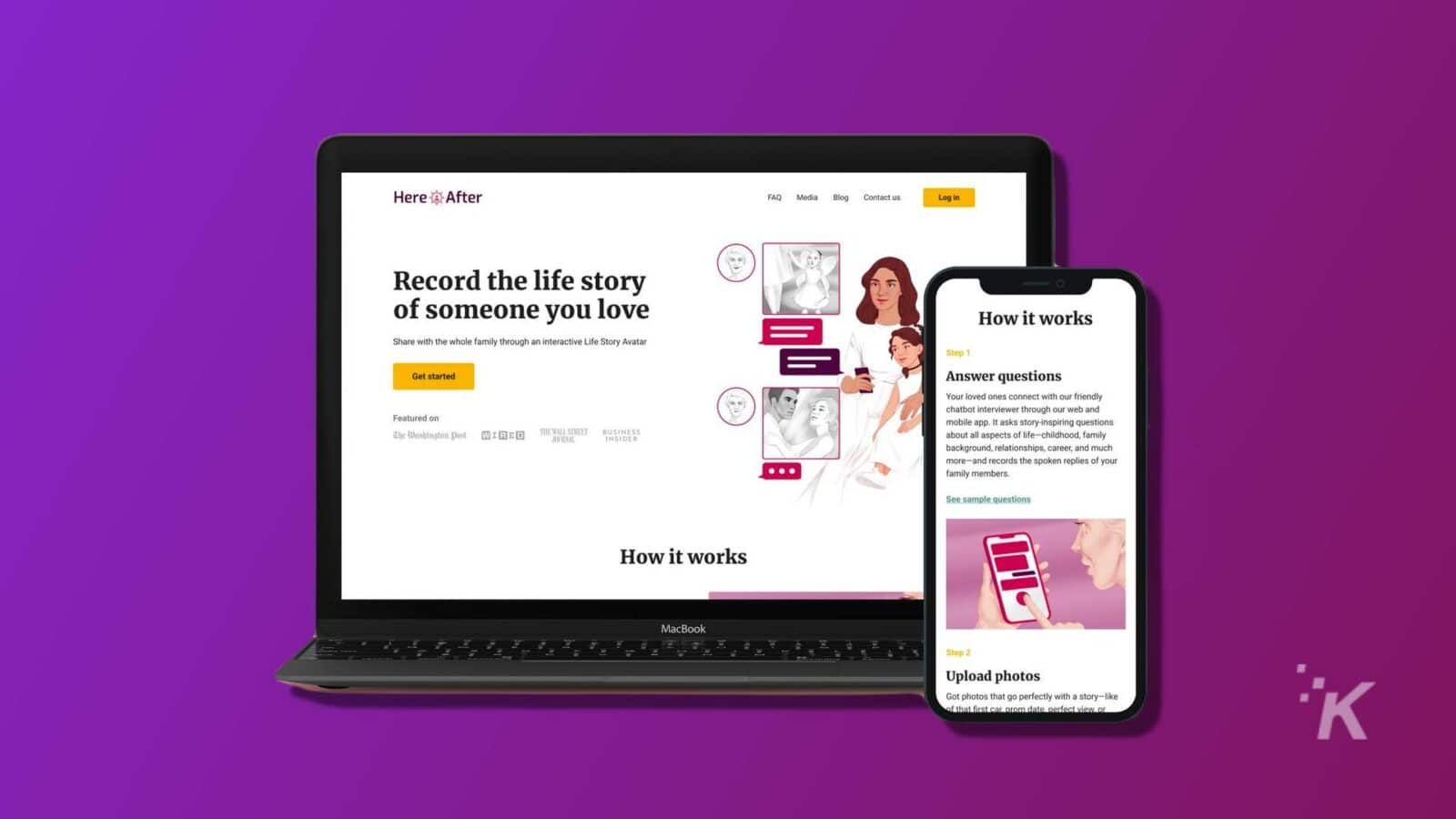

However, not every startup seems blatantly predatory. One startup, HereAfter AI, seems less like an attempted resurrection and more like an interactive museum.

James Vlahos, who founded HereAfter AI, did so after creating his own “dadbot.” When Vlahos learned of his father’s terminal lung cancer, he aggregated memories that would become his dad’s digital avatar.

Constructing the chatbot may help some through the grieving process. We already look at photos and read old handwritten recipe cards. These AIs could serve as another way to remember those we love.

But as advertised now, most grief tech seems as predatory as a Victorian-era séance.
