Google Lens is getting even better when using images for search

Google Lens and Search are getting some big updates.

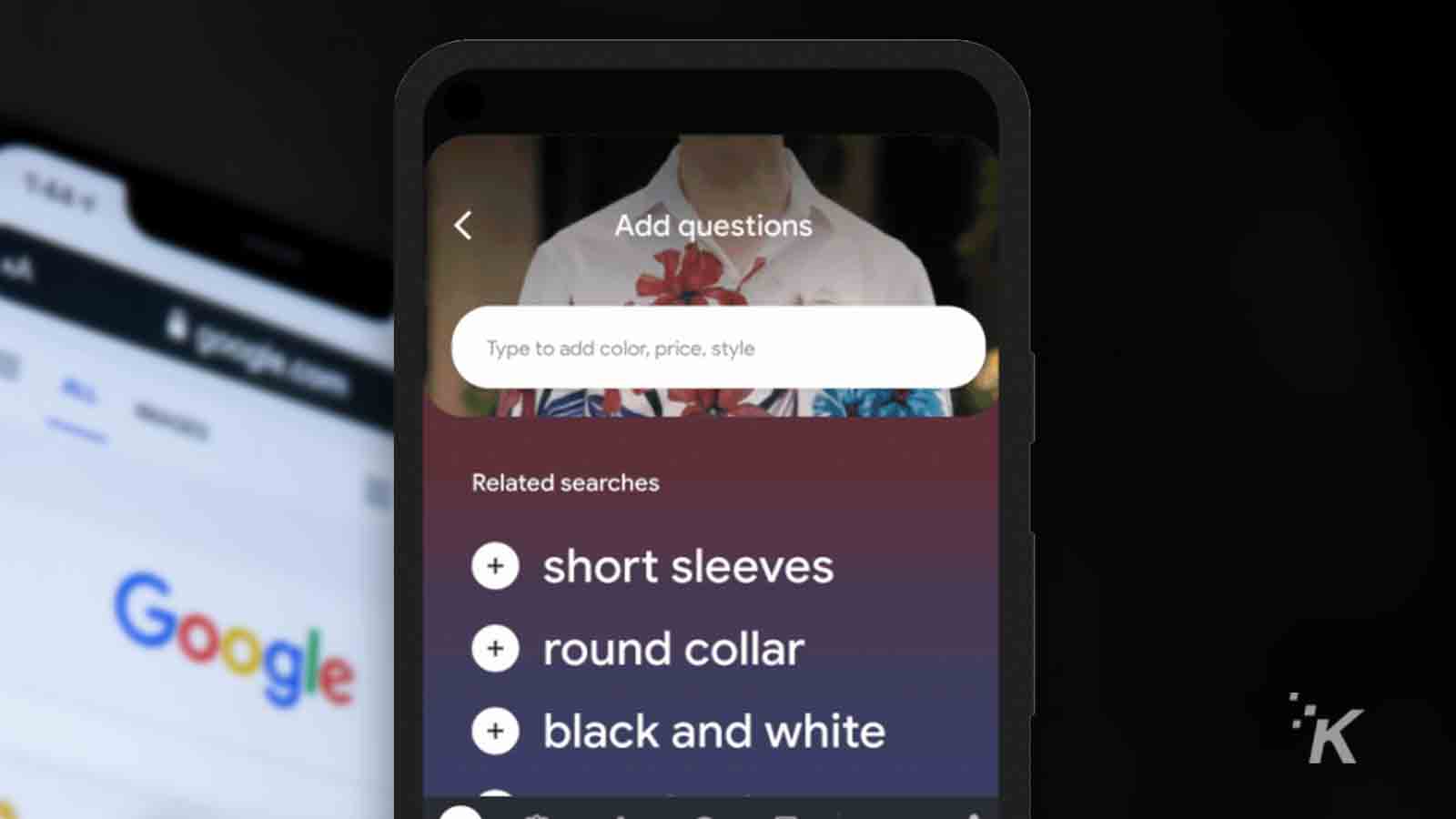

Google Lens is about to get an update that will supercharge its search prowess. It’s called multimodal search, which is really a fancy way of saying that you’ll be able to narrow a search by adding text after you’ve used Lens to take a picture of something.

This new Google Lens feature is going to come in clutch for how-to content or repair videos: you can take a snap of whatever is broken, then add “repair” or “how-to” to give Google the extra context it needs for the search.

It’ll be just as handy for shopping, so you can narrow things down to the specific type of clothing you’re looking for, instead of having to scroll through hundreds of assorted shirts to find the socks you were actually searching for.

Google is also working on a new “Lens mode,” which will be added to the Google app on iOS. It will let users start a search from any image they see while browsing the web. Google says it’ll be coming “soon,” whatever that means, but it’ll be US-only to begin with.

Lens will also be added to the desktop Chrome browser, where it will work like TinEye or any of the other reverse-image search tools, letting users search from any image or video they see while browsing, without leaving the tab they’re on. That’s going to be a global rollout, also arriving “soon.”

Google Search is also getting some new tricks, with a “Things to know” section added to Search when you’re looking for new topics. This will have things like how-to content, tips, and associated topics that you might not have thought to search for.

All of these updates come from Google’s work on its new Multitask Unified Model, or MUM for short. Think of it as a way to use Search as if you’re talking to an expert in your chosen topic, so you’ll get relevant, nuanced answers to your queries.

We’ll have to wait and see how well it works once it’s available, but it’s exciting to think of computers being able to answer as if they’re human, instead of simply regurgitating facts.
