Can privacy and personalization coexist?



Fresh off the 2013 Snowden leaks, the internet’s collective attention turned to mass data collection practices and the companies that employed them. Our views started to harden, presenting the first large-scale shift in consumer sentiment regarding data collection by online companies.

This presented a bit of a paradox. Now aware of the threat we faced, we were forced to pick a side. For some of us, that meant shifting our time and attention away from the most egregious offenders. For others, it was a blip on the radar, one where we found ourselves aware of what was happening, but ignorant as to what could be done about it. We lost interest and eventually threw up our hands in resignation. Consumers have always been demanding, but now we had a rather impossible problem to solve. We wanted the same, personalized user experience we’d become accustomed to, yet we no longer felt comfortable handing over the data required to get it.

This is the tip of the iceberg, but it poses an interesting question about privacy and personalization. Every major service we use today, from Google search to Facebook, or

According to a couple of recent studies, we’ve reached a tipping point. In the US, for example, 81 percent of people feel they have very little or no control over data collection. Globally, 84 percent of internet users want to do more to protect their privacy, yet more than half say they don’t know how.

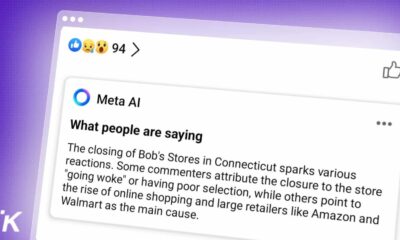

Facebook, for example, has come under scrutiny over the past half-decade for a number of privacy scandals, including one massive breach in which Cambridge Analytica attempted to turn an election using data that it obtained legally but that, according to Facebook, it should never have had access to.

TikTok is on the verge of a ban in the United States if it can’t find a deal to sell its US operations to a trusted company that keeps it from sending user data to China.

The good news is that digital literacy is on the rise. People are, for the first time in the digital age, thinking through their decisions at scale. And while we’re not where we need to be just yet, it’s undeniable that we’re making progress.

As the public becomes more educated about what’s being collected, and by whom, we’re seeing increased demand for solutions built on platforms designed around privacy.

Years back, we saw an evolution in the way businesses thought about reaching their customers. “Mobile first” was all the rage, until it became ubiquitous. Today, nearly every app or website is built with a mobile-first approach, and we no longer hear the term in use. It’s assumed. It no longer needs to be stated that a digital company is focused on mobile users. In fact, it would be foolish for a company not to place the bulk of its focus here, as most digital consumers access the web primarily from their mobile devices.

Today we’re seeing the same shift in privacy-focused alternatives to major services. As we educate the public about data security, companies are forced to adapt, building in privacy as a foundational element through UX design. Privacy is no longer reactionary; it’s ingrained into the DNA of forward-thinking companies.

We’re getting there. Federated learning, for example, has given rise to a privacy-centric approach to personalization. A search engine built this way would still collect your data, but that data would never leave the device you used to perform the search. Rather than being sent to a central server for analysis and future AI training, the data stays with you. Personalization algorithms train themselves on the device, and only the resulting model updates are combined centrally, without ever sharing your most personal information.
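To make the idea concrete, here is a minimal sketch of one common flavor of federated learning, often called federated averaging. It is a toy illustration, not any particular product’s implementation: the tiny linear model, the function names (`local_update`, `federated_average`, `run_rounds`), and the learning rate are all assumptions chosen for readability. The point is what crosses the network: each device trains on its own data and hands back only model weights.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (feature, label) pair that stays on the device


def local_update(weights: List[float], data: List[Sample],
                 lr: float = 0.05, epochs: int = 5) -> List[float]:
    """Runs on the device: fit y = w*x + b to private data, return weights only."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        # Plain gradient descent on the mean squared error of the local data.
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return [w, b]


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Runs on the server: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(wts[i] * n for wts, n in updates) / total for i in range(dim)]


def run_rounds(clients: List[List[Sample]], rounds: int = 50) -> List[float]:
    """Simulate several rounds; the server sees weights, never raw samples."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data))
                   for data in clients]
        global_weights = federated_average(updates)
    return global_weights


if __name__ == "__main__":
    # Two hypothetical devices whose private data both follow y = 2x + 1.
    device_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
    device_b = [(-2.0, -3.0), (2.0, 5.0)]
    print(run_rounds([device_a, device_b]))
```

In this simulation the shared model settles close to a slope of 2 and an intercept of 1, even though the server only ever handles weight lists. Real systems typically layer further protections on top, such as encrypting or adding noise to the updates, which this sketch leaves out.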

In the privacy vs personalization debate, it’s really the best solution we have moving forward, one that protects consumers while still delivering the experiences they’ve come to expect. And it will only get better with time, and increased use.

Beyond technology, we need a shift in the way we view privacy. Companies need to think big picture early on. They need to anticipate problems before they happen.

Currently, we’re living in a world where consumer outrage leads to reactionary measures that add security protocols only after consumers demand them. All too often, businesses rely on a siloed approach that builds pieces of software in isolation. Rather than interfacing among teams, privacy and security professionals focus on their tasks while those responsible for programming, design, marketing, and the like are all worried about something else.

To work, privacy needs to be built into every element of the application, and the earlier the better.

These privacy-focused solutions empower users and start to chip away at the status quo. Only after we’ve come to expect privacy can we begin demanding it in every application we use.

Once there, trust in systems, and in companies, improves. Corporations are forced to adopt ethical solutions or risk losing consumers who are willing to seek better options elsewhere.

So can privacy and personalization coexist? Absolutely. But a better question is: When are we going to demand both?

Editor’s Note: Leif-Nissen Lundbæk (Ph.D.) is Co-Founder and CEO of the technology company XAIN AG. His work focuses mainly on algorithms and applications for privacy-preserving artificial intelligence. The Berlin-based company aims to solve the challenge of combining AI with privacy with an emphasis on Federated Learning.

XAIN won the first Porsche Innovation Contest and has already worked successfully with Porsche AG, Daimler AG, Deutsche Bahn, and Siemens. Before founding XAIN, Leif-Nissen Lundbæk worked with Daimler AG and IBM. He studied Economics at the Humboldt University in Berlin, received his M.Sc. in Mathematics at Heidelberg University and an M.Sc. with distinction in Software Engineering at the University of Oxford, and obtained his Ph.D. in Computing at Imperial College London.