Move over, Bob Ross: Nvidia’s new AI turns your sketches into masterpieces

No, really, it’s that good.

One of the unique features of the human condition is our urge to create art. It’s not found anywhere else in nature, although now, thanks to Nvidia, it might reside inside a computer. The latest AI-driven software from the chipmaker can create art from the simplest of sketches.

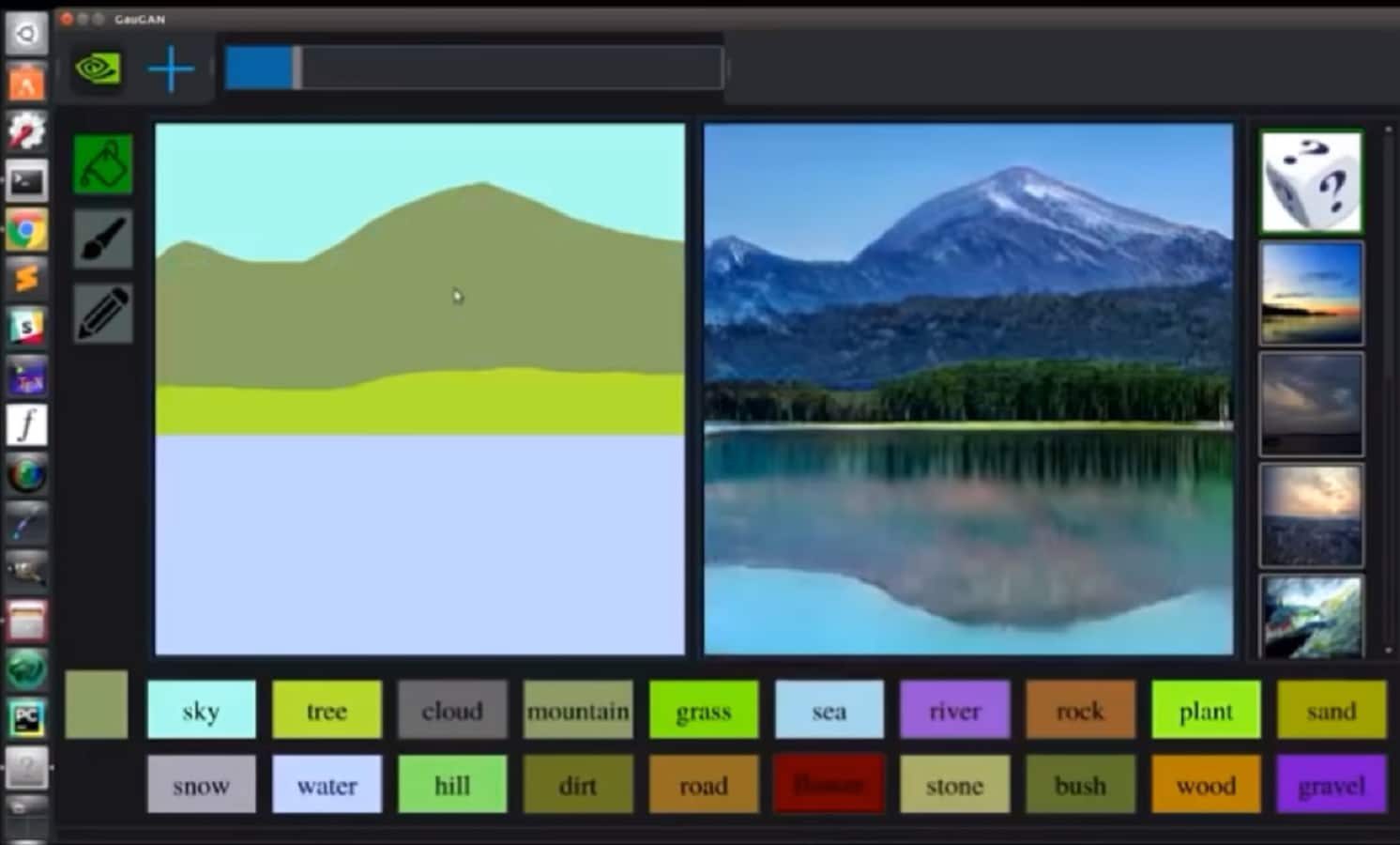

Called GauGAN, a nod to the French post-Impressionist painter Paul Gauguin combined with the Generative Adversarial Network (GAN) that powers it, the software is what Nvidia describes as a “smart paintbrush.”

> Without culture, there really can’t be art, as we know it, because art cannot exist separate from culture. Art reflects culture, transmits culture, shapes culture, and comments on culture.

Okay… you might be wondering why I’m dropping a quote about art and culture into a discussion about AI. It parallels the process the GAN uses to create its final product. Just as art and culture are intertwined, so are the two neural networks working in tandem to create the final images.

A human operator sketches some simple shapes and tags them with landscape features such as sky, tree, grass, or river. The first network, the generator, turns that sketch into what it thinks is a landscape. During training, its output is passed to the second network, the discriminator, which compares it against the real landscape images in the training dataset and judges whether the result looks real or fake. That verdict is fed back to the generator, which gradually learns to produce images convincing enough to fool the discriminator. Once training is done, the generator does the painting on its own.
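
To make that tug-of-war a bit more concrete, here is a minimal sketch of the classic GAN training objective in plain Python. It assumes the discriminator outputs a probability that an image is real; the function names are hypothetical, and this binary cross-entropy formulation is the textbook version, not necessarily the exact loss Nvidia used for GauGAN.

```python
import math

EPS = 1e-12  # keeps log() away from log(0)


def binary_cross_entropy(p: float, target: float) -> float:
    """Loss for predicting probability p when the true label is target (0 or 1)."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """The discriminator wants real images scored near 1 and generated ones near 0."""
    return binary_cross_entropy(d_real, 1.0) + binary_cross_entropy(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    """The generator is rewarded when the discriminator mistakes its output for real
    (the common "non-saturating" form of the generator objective)."""
    return binary_cross_entropy(d_fake, 1.0)


if __name__ == "__main__":
    # A discriminator that easily spots the fake: low loss for D, high loss for G.
    print(discriminator_loss(d_real=0.9, d_fake=0.1))  # ≈ 0.211
    print(generator_loss(d_fake=0.1))                  # ≈ 2.303
    # A discriminator that has been fooled: the generator's loss drops.
    print(generator_loss(d_fake=0.9))                  # ≈ 0.105
```

In a typical training loop, these two losses are minimized in alternation, with one step updating the discriminator’s weights and the next updating the generator’s.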

Bryan Catanzaro, vice president of applied deep learning research at Nvidia, says the GauGAN program is “like a coloring book picture that describes where a tree is, where the sun is, where the sky is.” It may be far more complicated and powerful than the paint-by-numbers pictures you did back in kindergarten, but the underlying principle is the same: the user tags areas of the frame with features, essentially numbering those regions so the software can fill them in with realistic textures.
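
In machine-learning terms, that “coloring book” is a semantic label map: every pixel carries the number of the feature it belongs to. Models in GauGAN’s family typically receive such a map one-hot encoded, with one channel per feature. The sketch below shows that encoding in plain Python; the class names and their numbers are hypothetical, not Nvidia’s actual label set.

```python
from typing import List

# Hypothetical feature palette: each "color" in the coloring book gets a number.
LABELS = {"sky": 0, "tree": 1, "grass": 2, "river": 3}

Grid = List[List[int]]


def one_hot(label_map: Grid, num_classes: int) -> List[Grid]:
    """Turn an H x W map of class ids into num_classes binary H x W masks.

    Channel c is 1 exactly where the user painted feature c, and 0 elsewhere.
    """
    height, width = len(label_map), len(label_map[0])
    channels = [[[0] * width for _ in range(height)] for _ in range(num_classes)]
    for y, row in enumerate(label_map):
        for x, cls in enumerate(row):
            if not 0 <= cls < num_classes:
                raise ValueError(f"unknown class id {cls} at ({y}, {x})")
            channels[cls][y][x] = 1
    return channels


if __name__ == "__main__":
    S, T, G, R = (LABELS[k] for k in ("sky", "tree", "grass", "river"))
    # A tiny 3x4 "sketch": sky on top, a tree, then grass with a river through it.
    sketch = [
        [S, S, S, S],
        [T, S, S, S],
        [G, G, R, G],
    ]
    masks = one_hot(sketch, num_classes=len(LABELS))
    print(masks[LABELS["river"]])  # [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 0]]
```

In this framing, relabeling a region (say, from “grass” to “snow”) just means changing the numbers in that part of the map before the generator runs again.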

It’s pretty darn good, too, knowing to add reflections to bodies of water and to change the foliage if you switch a feature like “grass” to “snow.” This feels like a much better use of GANs, and it’s certainly more palatable than other applications of the technology, such as deepfakes or ThisPersonDoesNotExist.

Sadly, we can’t try it out just yet, but if you’re at the GPU Technology Conference this week, you can get some hands-on time with it.

What do you think? Interested in trying out this tech from Nvidia? Let us know down below in the comments or carry the discussion over to our Twitter or Facebook.
