Watch Facebook’s whistleblower step forward in damning new 60 Minutes interview

Everything truly is terrible over at Facebook.


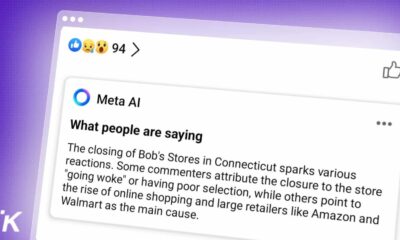

Over the past couple of weeks, you might have seen one of the many damning reports about Facebook from the Wall Street Journal. From teen girls' mental health to drug trafficking, the WSJ was dropping the mic on a variety of subjects.

Well, it turns out much of that information came from Frances Haugen, a former Facebook employee turned whistleblower who took loads of internal information from the company before filing complaints with federal agencies.

Now, she's speaking out on 60 Minutes, and it's every bit as bad as (or maybe worse than) you thought. Check it out below:

In the interview, she talks about many things, including Facebook's conflict of interest between what is good for its users and what makes the company money. Essentially, the longer you stay engaged, the more money Facebook makes. And how do you keep people engaged longer? By showing them divisive content.

She also took internal reports suggesting that Facebook has been misleading the public about making significant progress against hate. One report stated, "We estimate that we may action as little as 3-5% of hate, and ~.06% of V&I [Violence and Incitement] on Facebook." That report is from this year.

Naturally, Facebook saw the 60 Minutes segment and issued a response. Lena Pietsch, Facebook's director of policy communications, responded to the 60 Minutes report with the following statements:

“Every day our teams have to balance protecting the right of billions of people to express themselves openly with the need to keep our platform a safe and positive place. We continue to make significant improvements to tackle the spread of misinformation and harmful content. To suggest we encourage bad content and do nothing is just not true.”

In response to the claim that internal research suggests the company is not doing enough to combat hate, misinformation, and conspiracy:

“We’ve invested heavily in people and technology to keep our platform safe, and have made fighting misinformation and providing authoritative information a priority. If any research had identified an exact solution to these complex challenges, the tech industry, governments, and society would have solved them a long time ago. We have a strong track record of using our research — as well as external research and close collaboration with experts and organizations — to inform changes to our apps.”

In response to the claim that the company puts profit ahead of user safety:

“Hosting hateful or harmful content is bad for our community, bad for advertisers, and ultimately, bad for our business. Our incentive is to provide a safe, positive experience for the billions of people who use Facebook. That’s why we’ve invested so heavily in safety and security.”

In response to the claim that Facebook puts more eyes on polarizing and hateful content:

“The goal of the Meaningful Social Interactions ranking change is in the name: improve people’s experience by prioritizing posts that inspire interactions, particularly conversations, between family and friends — which research shows is better for people’s well-being — and deprioritizing public content. Research also shows that polarization has been growing in the United States for decades, long before platforms like Facebook even existed, and that it is decreasing in other countries where Internet and Facebook use has increased. We have our role to play and will continue to make changes consistent with the goal of making people’s experience more meaningful, but blaming Facebook ignores the deeper causes of these issues – and the research.”

In response to the claim that Facebook became less safe after safety measures were put in place, then rolled back:

“We spent more than two years preparing for the 2020 election with massive investments, more than 40 teams across the company, and over 35,000 people working on safety and security. In phasing in and then adjusting additional emergency measures before, during and after the election, we took into account specific on-platforms signals and information from our ongoing, regular engagement with law enforcement. When those signals changed, so did the measures. It is wrong to claim that these steps were the reason for January 6th — the measures we did need remained in place through February, and some like not recommending new, civic, or political groups remain in place to this day. These were all part of a much longer and larger strategy to protect the election on our platform — and we are proud of that work.”

Make sure to watch the full interview above, as that is just the tip of the iceberg. What happens now? Time will tell, but this whole thing is just getting started.

