Facebook is finally promoting reputable news sources, but don’t expect it to last

The results are good for the world, but bad for Facebook.

If you’ve been on Facebook over the past couple of months, you’ve likely noticed that misinformation and outright lies are rampant on the platform. Now, it seems the company is promoting reputable news sources in its feed, but only for a limited time.

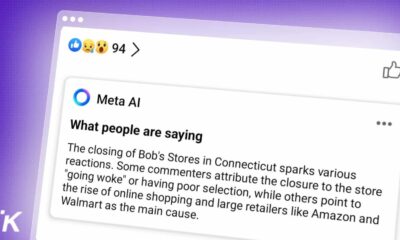

According to a report from The New York Times, employees urged Facebook to tweak its “news ecosystem quality” scores, or N.E.Q., as the election geared up. N.E.Q. is an internal ranking that Facebook assigns to news outlets “based on signals about the quality of their journalism.”

These scores play a role in how news is displayed in people’s feeds, but they are normally given very little weight. After the spread of misinformation peaked in the days following Election Day, Facebook began leaning on the score more heavily. As a result, outlets like CNN and NPR were promoted more prominently, while partisan sites like Occupy Democrats and Breitbart were pushed down in feeds.

NYT notes that this change “was a vision of what a calmer, less divisive Facebook might look like,” but Facebook has since made it clear that the change isn’t permanent. Guy Rosen, who oversees Facebook’s integrity division, said during a call with reporters that the change was always meant to be temporary.

Facebook is likely hesitant to make these changes permanent because they could lead users to spend less time on the platform. In fact, NYT reports that Facebook ran experiments, dubbed “P(bad for the world),” that asked users whether high-reach posts were good or bad for the world. Alarmingly, far more posts were considered bad than good. Tweaking the algorithm to show these “bad for the world” posts less often resulted in users opening the app less.

Which leads us to the proverbial cherry on top. According to NYT, a summary of the results, which was posted to Facebook’s internal network, led the company to tweak the algorithm again, making it less aggressive in demoting “bad for the world” content.

So, basically, Facebook only cares about what’s bad for the world if it doesn’t affect user metrics.
