Facebook now lets you upvote and downvote News Feed content

By giving users greater choice in the content they see, Facebook shifts responsibility (and, yes, blame) away from the News Feed algorithm.


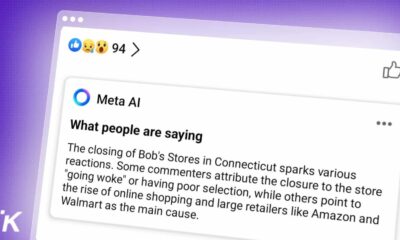

Meta today announced major changes to the Feed (formerly the News Feed). Facebook users can now directly influence the content they see by “upvoting” and “downvoting” posts.

The Facebook News Feed is a mix of posts from friends and family and “suggested” content from random pages and creators.

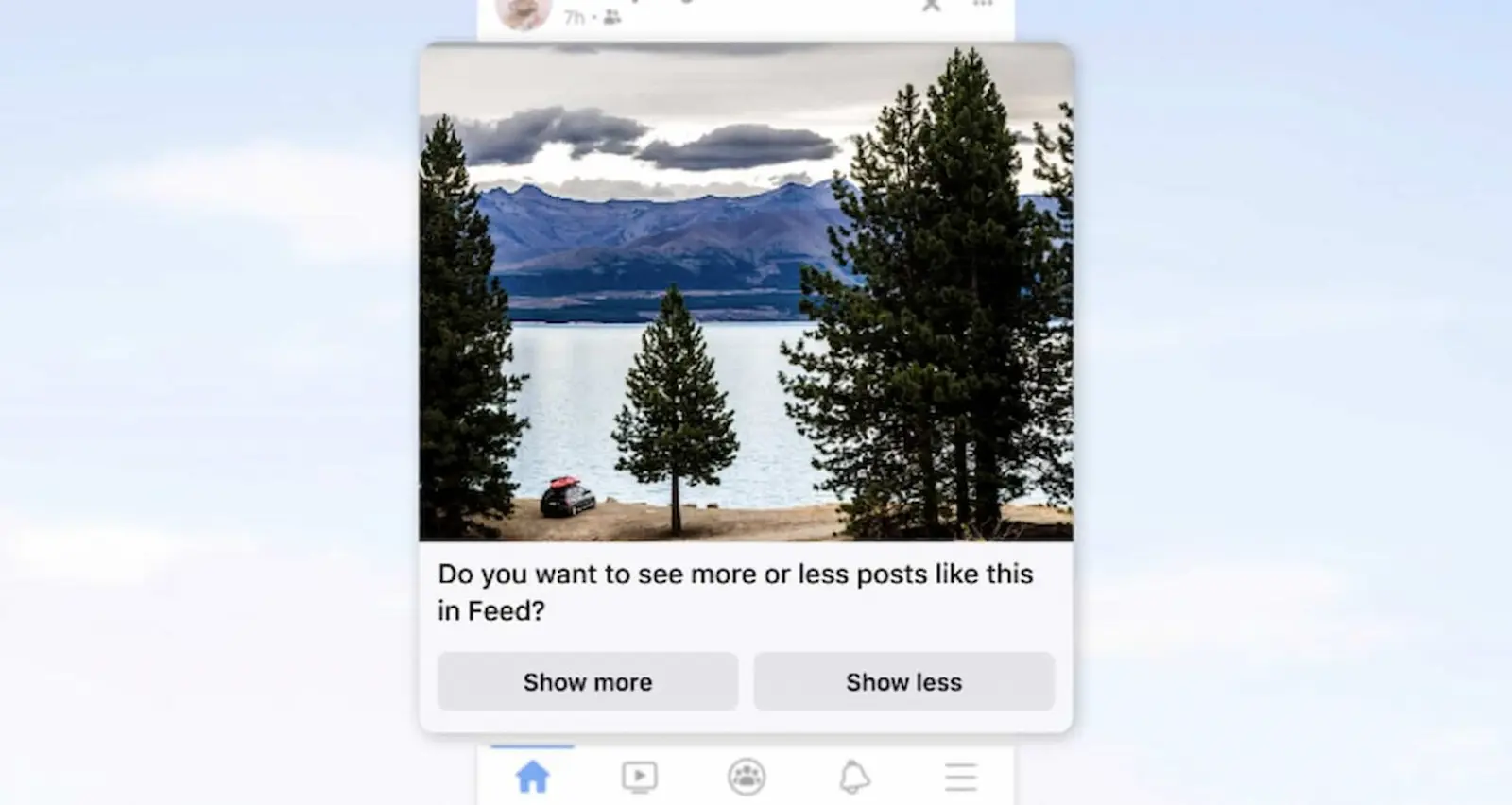

Starting today, users can tell Facebook to show less or more of a particular piece of content. Here’s how it works.

Giving Facebook Feedback

At first, Facebook will prompt users to provide feedback. The company plans to include a voting mechanism with all suggested posts soon.

Meta says the feedback will have a “temporary” effect on how the Facebook News Feed algorithm surfaces suggested content. It’s unclear how long these effects will last.

The company also plans to introduce an overhauled News Feed settings window. This will let users control how often certain kinds of posts appear in their timeline.

In practice, users will be able to see personal status updates more often while seeing fewer posts from public figures and pages.

Taking Control

The News Feed is Facebook’s equivalent of prime real estate. It wasn’t always so.

Its first incarnation was blissfully simple. It showed the latest status updates from friends, family, and casual acquaintances.

Over time, Facebook engineered the News Feed to increase engagement and deliver hyper-relevant ads.

This move wasn’t without controversy. Misleading and ultra-partisan content displaced selfies and life updates. Filter bubbles hardened.

Journalists and political elites decried Facebook as a risk to democracy.

It was no longer a plucky tech giant forged in a Harvard dorm room. It was an unthinking company prepared to sacrifice civil discourse and facts on the altar of virality.

Since the 2016 US presidential election, Facebook has battled to repair its tarnished image. The latest update is yet another step in that direction.

This is a good step. But there’s a cynical angle to be found here.

By giving users greater choice in the content they see, Facebook shifts responsibility (and, yes, blame) away from the News Feed algorithm.

